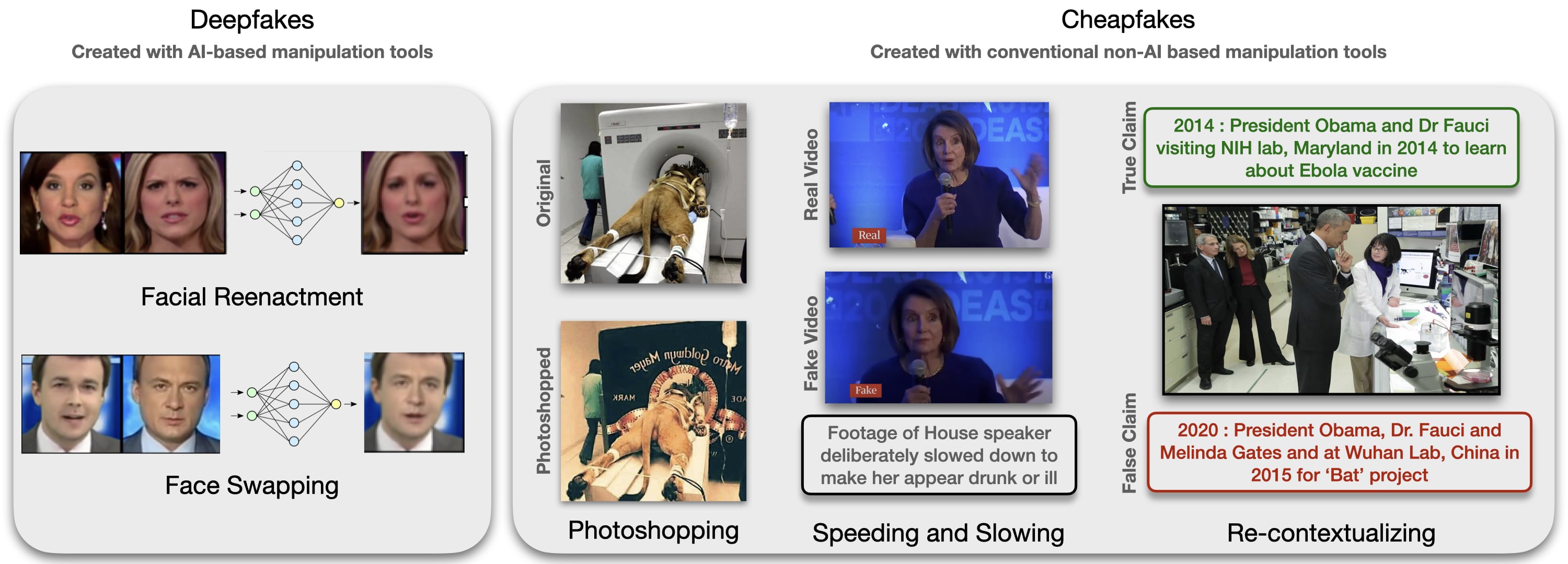

Cheapfake is a recently coined term that encompasses non-AI ("cheap") manipulations of multimedia content. Cheapfakes are known to be more prevalent than deepfakes. Cheapfake media can be created using editing software for image/video manipulations, or even without using any software, by simply altering the context of an image/video by sharing the media alongside misleading claims. This alteration of context is referred to as out-of-context (OOC) misuse of media. OOC media is much harder to detect than fake media, since the images and videos are not tampered. In this challenge, we focus on detecting OOC images, and more specifically the misuse of real photographs with conflicting image captions in news items. The aim of this challenge is to develop and benchmark models that can be used to detect whether given samples (news image and associated captions) are OOC, based on the recently compiled COSMOS dataset.

An image serves as evidence of the event described by a news caption. If two captions associated with an image are valid, then they should describe the same event. If they align with the same object(s) in the image, then they should be broadly conveying the same information. Based on these patterns, we define out-of-context (OOC) use of an image as presenting the image as an evidence of untrue and/or unrelated event(s)

Every image in the dataset is accompanied by two related captions. If the two captions refer to same object(s) in the image, but are semantically different, i.e., associate the same subject to different events, this indicates out-of-context (OOC) use of the image. However, if the captions correspond to the same event, irrespective of the object(s) the captions describe, this is defined as not-out-of-context (NOOC) use of the image.

In this task, the participants are asked to come up with methods to detect conflicting image-caption triplets, which indicates miscontextualization. More specifically, given (Image,Caption1,Caption2) triplets as input, the proposed model should predict corresponding class labels (OOC or NOOC). The end goal for this task is not to identify which of the two captions is true/false, but rather to detect the existence of miscontextualization. This kind of a setup is considered particularly useful for assisting fact checkers, as highlighting conflicting image-caption triplets allows them to narrow down their search space.

A NOOC scenario from Task 1 makes no conclusions regarding the veracity of the statements. In a practical scenario, multiple captions might not be available for a given image. In such a scenario, the task boils down to figuring out whether a given caption linked to the image is genuine or not. We argue that this is a challenging task, even for human moderators, without prior knowledge about the image origin. Luo et al. verified this claim with a study on human evaluators who were instructed not to use search engines, where the average human accuracy was around 65%.

In this task, the participants are asked to come up with methods to determine whether a given (Image,Caption) pair is genuine (real) or falsely generated (fake). Since our dataset only contains real, non-photoshopped images, it is suitable for a practical use case and challenging at the same time.